Preprocessing a custom text dataset with torchtext

1. Setup

Import the necessary packages:

    import torchdata.datapipes as dp
    import torchtext.transforms as T
    import spacy
    from torchtext.vocab import build_vocab_from_iterator

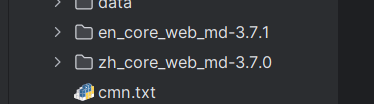

Download the spaCy models used for tokenization:

Load the models:

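    eng = spacy.load("en_core_web_md-3.7.1")  # English model, used to tokenize English text
    zh = spacy.load("zh_core_web_md-3.7.0")   # Chinese model, used to tokenize Chinese text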

Load the dataset:

    FILE_PATH = 'cmn.txt'
    data_pipe = dp.iter.IterableWrapper([FILE_PATH])
    data_pipe = dp.iter.FileOpener(data_pipe, mode='rb')
    data_pipe = data_pipe.parse_csv(skip_lines=0, delimiter='\t', as_tuple=True)

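Line 2 creates an iterable of file names.

Line 3 passes that iterable to `FileOpener`, which opens each file in read mode.

Line 4 calls a function that parses the file. It returns an iterable of tuples, one for each row of the tab-separated file.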
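To make the shape of the parsed data concrete, here is a minimal sketch that uses only Python's standard `csv` module instead of torchdata. The sample rows are invented for illustration, and the three-column layout (English, Chinese, attribution) is an assumption about `cmn.txt`, suggested by the `removeAttribution` helper used later.

```python
import csv
import io

# Hypothetical stand-in for cmn.txt: English, Chinese and an attribution
# column, separated by tabs. These rows are invented for illustration.
sample = "Hi.\t嗨。\tattribution A\nRun!\t你跑吧!\tattribution B\n"

# csv.reader yields one list per line; converting each list to a tuple
# mirrors what as_tuple=True requests in the pipeline.
rows = [tuple(row) for row in csv.reader(io.StringIO(sample), delimiter="\t")]

for row in rows:
    print(row)
```

Each element is a tuple of strings, one per tab-separated field, which is the form the later steps consume.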

A DataPipe can be thought of as a dataset object on which we can perform various operations.

    def removeAttribution(row):
        """Keep only the first two elements of a row tuple."""
        return row[:2]

    data_pipe = data_pipe.map(removeAttribution)

The `map` function applies a given function to every element of `data_pipe`.

Tokenization:

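    def engTokenize(text):
        """Tokenize English text and return a list of tokens."""
        engTokenList = [token.text for token in eng.tokenizer(text)]
        return engTokenList

    def zhTokenize(text):
        """Tokenize Chinese text and return a list of tokens."""
        return [token.text for token in zh.tokenizer(text)]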

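2. Building the vocabulary

Build one vocabulary per language from the tokenized sentences:

    def getTokens(data_iter, place):
        """Yield token lists for the English (place == 0) or Chinese side."""
        for english, chinese in data_iter:
            if place == 0:
                yield engTokenize(english)
            else:
                yield zhTokenize(chinese)

    source_vocab = build_vocab_from_iterator(
        getTokens(data_pipe, 0),
        min_freq=2,
        specials=['<pad>', '<sos>', '<eos>', '<unk>'],
        special_first=True
    )
    # Tokens that are not in the vocabulary map to <unk>
    source_vocab.set_default_index(source_vocab['<unk>'])

    target_vocab = build_vocab_from_iterator(
        getTokens(data_pipe, 1),
        min_freq=2,
        specials=['<pad>', '<sos>', '<eos>', '<unk>'],
        special_first=True
    )
    target_vocab.set_default_index(target_vocab['<unk>'])

    print(source_vocab.get_itos()[:9])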

`source_vocab.get_itos()` returns a list of tokens, ordered by their index in the vocabulary.
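The idea behind the vocabulary can be sketched in plain Python. This is not torchtext's implementation: the helper `build_vocab`, the toy sentences and the tie-breaking order are assumptions made for illustration. It only shows how special tokens placed first, a minimum frequency and a default `<unk>` index fit together.

```python
from collections import Counter

def build_vocab(token_lists, min_freq, specials):
    """Return (itos, stoi): specials first, then tokens seen >= min_freq times."""
    counts = Counter(tok for tokens in token_lists for tok in tokens)
    # Order by descending frequency, breaking ties alphabetically
    # (an ordering chosen for this sketch so the result is deterministic).
    kept = sorted(
        (t for t, c in counts.items() if c >= min_freq and t not in specials),
        key=lambda t: (-counts[t], t),
    )
    itos = list(specials) + kept
    stoi = {tok: i for i, tok in enumerate(itos)}
    return itos, stoi

sentences = [["go", "."], ["go", "away", "."], ["run", "!"]]
itos, stoi = build_vocab(
    sentences, min_freq=2, specials=["<pad>", "<sos>", "<eos>", "<unk>"]
)
unk = stoi["<unk>"]

print(itos)                  # ['<pad>', '<sos>', '<eos>', '<unk>', '.', 'go']
print(stoi.get("run", unk))  # 3, because "run" appears only once
```

Here `itos` plays the role of the list returned by `get_itos()`: the position of a token in the list is its index.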

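3. Numericalizing sentences with the vocabulary

Define a transform that converts tokens to indices and adds the start and end tokens, then apply it to every sentence pair:

    def getTransform(vocab):
        """Build a transform: tokens to indices, then add <sos> and <eos>."""
        text_transform = T.Sequential(
            # Convert each token to its index in the vocabulary
            T.VocabTransform(vocab=vocab),
            # Add <sos> (index 1) at the beginning of each sentence
            T.AddToken(1, begin=True),
            # Add <eos> (index 2) at the end of each sentence
            T.AddToken(2, begin=False)
        )
        return text_transform

    def applyTransform(sequence_pair):
        """Tokenize and numericalize an (English, Chinese) sentence pair."""
        return (
            getTransform(source_vocab)(engTokenize(sequence_pair[0])),
            getTransform(target_vocab)(zhTokenize(sequence_pair[1]))
        )

    data_pipe = data_pipe.map(applyTransform)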
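As a rough picture of what this numericalization step produces, the following plain-Python sketch looks up each token and wraps the result with the start and end indices. The function `numericalize` and the small `stoi` dictionary are hypothetical. The indices 1, 2 and 3 follow from the order of the special tokens in the vocabulary (`<pad>`, `<sos>`, `<eos>`, `<unk>`).

```python
def numericalize(tokens, stoi, sos_id=1, eos_id=2, unk_id=3):
    """Map tokens to indices, then add the <sos> and <eos> indices."""
    ids = [stoi.get(tok, unk_id) for tok in tokens]  # unknown tokens -> <unk>
    return [sos_id] + ids + [eos_id]

# Toy vocabulary, invented for illustration
stoi = {"<pad>": 0, "<sos>": 1, "<eos>": 2, "<unk>": 3, ".": 4, "go": 5}

print(numericalize(["go", "home", "."], stoi))  # [1, 5, 3, 4, 2]
```

The word "home" is missing from the toy vocabulary, so it becomes index 3 (`<unk>`).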

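4. Batching

Models are usually trained in batches. For sequence-to-sequence models, it helps to keep the sequences within a batch similar in length. To do this, we use the `bucketbatch` function of `data_pipe`.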

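    def sortBucket(bucket):
        """Sort a bucket by source length, then by target length."""
        return sorted(bucket, key=lambda x: (len(x[0]), len(x[1])))

    data_pipe = data_pipe.bucketbatch(
        batch_size=4, batch_num=5, bucket_num=1,
        use_in_batch_shuffle=False, sort_key=sortBucket
    )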
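The effect of sorting before batching can be sketched without torchdata. This simplified version, with the hypothetical helper `bucket_batches`, treats the whole input as a single bucket and ignores the pooling and shuffling that the real datapipe performs. It only shows why sorted chunks end up with similar lengths.

```python
def bucket_batches(pairs, batch_size):
    """Sort pairs by (source length, target length) and cut them into batches."""
    ordered = sorted(pairs, key=lambda x: (len(x[0]), len(x[1])))
    return [ordered[i:i + batch_size] for i in range(0, len(ordered), batch_size)]

# Toy (source, target) index sequences, invented for illustration
pairs = [
    ([1, 5, 2], [1, 7, 2]),
    ([1, 4, 5, 6, 2], [1, 8, 9, 2]),
    ([1, 2], [1, 2]),
    ([1, 4, 5, 2], [1, 6, 2]),
]

batches = bucket_batches(pairs, batch_size=2)
for batch in batches:
    print([len(src) for src, _ in batch])
# [2, 3]
# [4, 5]
```

Sequences of neighbouring lengths land in the same batch, so little padding is needed later.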

A batch in `data_pipe` now has the form [(X_1, y_1), (X_2, y_2), (X_3, y_3), (X_4, y_4)].

We now convert each batch to the form ((X_1, X_2, X_3, X_4), (y_1, y_2, y_3, y_4)). To do this, we write a small function:

    def separateSourceTarget(sequence_pairs):
        """Split a list of (source, target) pairs into (sources, targets)."""
        sources, targets = zip(*sequence_pairs)
        return sources, targets

    data_pipe = data_pipe.map(separateSourceTarget)
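The `zip(*...)` idiom used here transposes a list of pairs. A small standalone example, with invented index sequences, shows the before and after:

```python
# A toy batch of two (source, target) pairs, invented for illustration
batch = [([1, 5, 2], [1, 7, 2]), ([1, 6, 2], [1, 8, 2])]

# Unpacking the batch into zip groups all first elements and all second elements
sources, targets = zip(*batch)

print(sources)  # ([1, 5, 2], [1, 6, 2])
print(targets)  # ([1, 7, 2], [1, 8, 2])
```

Both results are tuples, which is why they are converted with `list(...)` before being turned into tensors in the padding step.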

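5. Padding

Pad the sequences in each batch to the same length, then print a few transformed sentences to check the result:

    def applyPadding(pair_of_sequences):
        """Convert both sequence groups to tensors, padding with index 0 (<pad>)."""
        return (T.ToTensor(0)(list(pair_of_sequences[0])),
                T.ToTensor(0)(list(pair_of_sequences[1])))

    data_pipe = data_pipe.map(applyPadding)

    # Index-to-token lookup lists, used to print words instead of indices
    source_index_to_string = source_vocab.get_itos()
    target_index_to_string = target_vocab.get_itos()

    def showSomeTransformedSentences(data_pipe):
        """
        Show what the sentences look like after applying all transforms.
        Prints the actual words instead of the corresponding indices.
        """
        for sources, targets in data_pipe:
            if sources[0][-1] != 0:
                continue  # Skip batches whose first sentence has no padding
            for i in range(4):
                source = ""
                for token in sources[i]:
                    source += " " + source_index_to_string[token]
                target = ""
                for token in targets[i]:
                    target += " " + target_index_to_string[token]
                print(f"Source: {source}")
                print(f"Target: {target}")
            break

    showSomeTransformedSentences(data_pipe)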

Output:

    Source: <sos> <unk> ! <eos> <pad>
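The trailing `<pad>` in the output comes from padding shorter sequences up to the longest one in the batch. A minimal list-based sketch of that step follows. The helper `pad_batch` is hypothetical and returns plain lists, whereas the tutorial code produces tensors.

```python
def pad_batch(sequences, pad_id=0):
    """Right-pad every sequence with pad_id to the length of the longest one."""
    max_len = max(len(seq) for seq in sequences)
    return [list(seq) + [pad_id] * (max_len - len(seq)) for seq in sequences]

# Two toy index sequences of different lengths, invented for illustration
padded = pad_batch([[1, 3, 4, 2], [1, 5, 6, 7, 2]])
print(padded)  # [[1, 3, 4, 2, 0], [1, 5, 6, 7, 2]]
```

Index 0 is used because `<pad>` is the first special token in the vocabulary.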